The hardware infrastructure lies at the bottom of the architecture. It includes servers, storage systems, and networking hardware. The actual hardware lies outside the scope of the MIKELANGELO project.

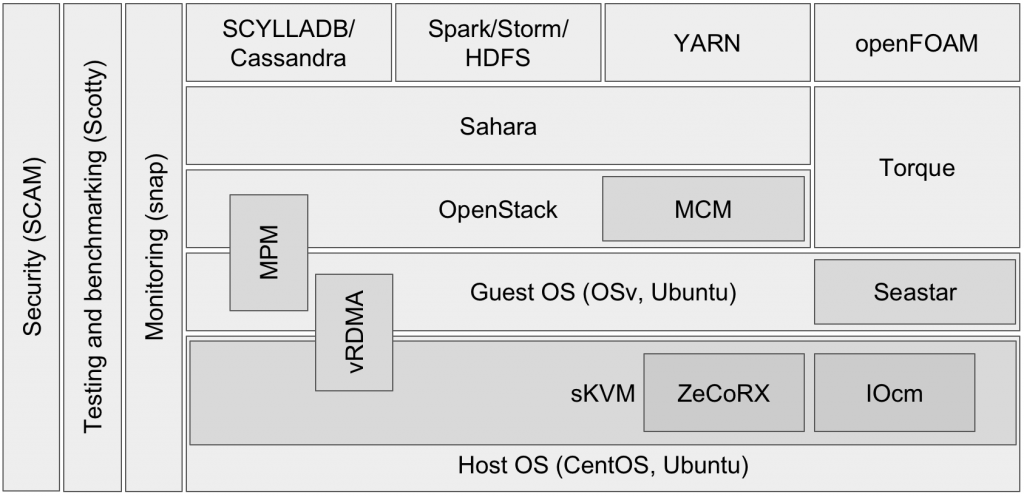

Above the bare metal layer lies the virtualisation layer, which may include an operating system and a hypervisor. In the virtualisation layer, the project is developing an improved hypervisor called sKVM. Here, the engineers are focusing on reducing I/O overhead and improving virtualisation security. Furthermore, this layer integrates RDMA into sKVM, allowing for fast and flexible communication between VMs. This layer represents the core of the MIKELANGELO project, since very fast I/O in VMs is one of the project’s main promises. The architecture leverages fast VM I/O throughout the whole stack. I/O is this important because data processing is one of the major tasks of virtual infrastructures. Moreover, data growth outstrips even the gains in networking and computing performance.

OSv represents the third layer in MIKELANGELO’s architecture. OSv is a new operating system built from scratch specifically for cloud computing. In this project, engineers are extending OSv to run HPC and big data applications. Furthermore, the engineers are integrating RDMA with OSv and improving application deployment. Deployment originally relied on Capstan. However, Capstan alone soon proved insufficient, and the project has developed a new approach called LEET. LEET uses Capstan as one of its building blocks, improves it, and adds package management, composition, and OpenStack compatibility. OSv’s major benefits are high performance, a low footprint, and full VM isolation through virtualisation.

The cloud middleware comes next in the architecture. The project briefly evaluated several cloud middleware options to find the best fit for leveraging sKVM and OSv. The final outcome is support for OpenStack (and its projects Nova, Heat, etc.), as this best serves the impact goals of the MIKELANGELO project.

On top of the cloud and virtualisation layer, big data and HPC applications serve as use cases. The big data applications primarily target Apache’s big data stack. The HPC use cases run on vTorque, a virtualisation-enabled update of the renowned Torque batch system. They execute OpenFOAM simulations and custom simulations of cancellous bones. Both use cases aim to allow customers to use clusters on demand with customised environments. At the same time, the use cases will offer top computational and I/O efficiency. Currently, big data and HPC applications typically do not use cloud computing, mostly because of the low I/O performance. However, both areas can benefit greatly from the cloud paradigm, since it offers a lot of flexibility.

Here we provide a summary of the project’s goals and approach, including basic background knowledge. The post also explains how we are going to progress beyond the state of the art. The remainder of this post presents more details on MIKELANGELO’s technical merits in a bottom-up fashion, following MIKELANGELO’s architecture diagram.

A Hypervisor with Optimised I/O Processing: sKVM

MIKELANGELO introduces an improved version of the kernel-based virtual machine (KVM) hypervisor. To bring KVM into the context of virtualisation technology at large, we first provide an overview of virtualisation technology. Then we describe our advancements beyond the state of the art.

Hypervisors, such as KVM, execute and manage VMs. Traditionally, hypervisors fall into two categories. The first category contains the so-called type 1 hypervisors, also known as bare-metal hypervisors. Type 1 hypervisors run directly on the hardware without any fully fledged operating system beneath the hypervisor. Examples of these hypervisors are VMware ESX, Xen, and Hyper-V. Type 2 hypervisors run in user space of a base operating system. Examples of type 2 hypervisors are QEMU and KVM. Linux-VServer, Linux containers, BSD jails, and OpenVZ are often listed alongside them, although they provide operating-system-level virtualisation rather than a hypervisor. Nowadays, hypervisors such as KVM blur these boundaries, since they consist of kernel modules, which run in kernel mode.

MIKELANGELO will base its work on KVM. KVM is a popular hypervisor technology that uses Linux as its host system. KVM supports nearly arbitrary guest operating systems, has an open source license, and provides good performance. These features make KVM popular, especially in the context of cloud computing. For example, the vast majority of OpenStack-based clouds in production use KVM. However, KVM still performs below native speed. Although KVM offers near-zero overhead for compute virtualisation, its I/O virtualisation efficiency lies around 60-70% of native performance. Here, I/O refers to network communication and to disk access.
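
To make the efficiency figure concrete, the following minimal sketch converts an I/O virtualisation efficiency into the throughput a guest would see. The 10 Gbit/s native link rate and the function name are assumptions chosen for illustration, not measurements from the project.

```python
def effective_throughput(native_gbps: float, efficiency: float) -> float:
    """Return the throughput a guest sees, given native throughput
    and an I/O virtualisation efficiency in the range [0, 1]."""
    if not 0.0 <= efficiency <= 1.0:
        raise ValueError("efficiency must be between 0 and 1")
    return native_gbps * efficiency


NATIVE_GBPS = 10.0  # assumed example link rate, not a project measurement

low = effective_throughput(NATIVE_GBPS, 0.60)
high = effective_throughput(NATIVE_GBPS, 0.70)
print(f"guest sees roughly {low:.1f} to {high:.1f} of {NATIVE_GBPS:.1f} Gbit/s")
```

Under these assumed numbers, between three and four tenths of the native bandwidth is lost to virtualisation overhead, which is the gap the I/O work on sKVM targets.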

In MIKELANGELO, engineers at IBM will improve KVM with regard to I/O performance. The performance improvements will come from a new I/O scheduler that will be transparent to the guest system. This I/O scheduler will allocate resources for the I/O activity of guests, that is, for virtual I/O. Currently, such a virtual I/O scheduler does not exist. However, previous research by IBM on software called Elvis promises good results with this approach.

A New Operating System for the Cloud: OSv

OSv is the preferred guest operating system in MIKELANGELO’s cloud stack. OSv is an operating system developed from scratch by the start-up Cloudius Systems. Cloudius, who are part of MIKELANGELO’s consortium, have developed OSv specifically for cloud computing. In the following paragraphs we describe the motivation behind OSv and then MIKELANGELO’s improvements to OSv.

Currently, clouds mostly run guests with well-known operating systems such as Ubuntu, Debian, and CentOS. Most of the time, these guest systems use Linux as their foundation. Some more specialised systems, such as CoreOS, are stripped-down versions of Linux. The downside of the Linux approach to cloud guests lies in its inefficiency. Linux has not been developed specifically to run as a guest OS in a cloud. Thus, Linux carries a lot of unnecessary baggage in the form of legacy code that was intended for other purposes. This legacy code leads to inefficiencies. These inefficiencies, in turn, become apparent in start-up times, lowered computational throughput, and disk image size.

Containers, such as Linux containers, BSD jails, and OpenSolaris zones, offer an alternative and more lightweight approach to virtualisation. Currently, containers are very popular as the base technology for Docker, which offers quick deployment of applications and their dependencies. However, containers have multiple disadvantages. One disadvantage lies in the inherent difficulty of isolating containers well from the host operating system. This lack of isolation creates a vulnerability. Another major disadvantage of containers lies in the constraint on the operating system. A container offers controlled access to the host operating system. Thus, it is not possible to run a Windows guest within a Linux container. Consequently, one would ideally use full virtualisation with a low footprint on resources. OSv aims to deliver exactly this small footprint, as far as the guest operating system can influence the performance.

MIKELANGELO will improve OSv in three major areas: general efficiency, application support, and application packaging. To increase general efficiency, engineers will improve the SMP load balancer in the scheduler and reduce the boot time and the footprint on host system resources. MIKELANGELO will improve application support by adding support for additional unmodified executable formats, such as PIE, standard executables, and statically linked executables. To provide further application compatibility, OSv will support additional functions in the Linux/glibc ABI. In addition, MIKELANGELO will improve some function implementations in OSv, such as epoll(), to support more runtime environments, such as Ruby, Go, and Node.js. To improve application packaging and deployment, MIKELANGELO will extend Capstan. Capstan is a system for application deployment, which resembles Docker. In contrast to Docker, Capstan uses OSv in a fully virtualised environment. MIKELANGELO will furthermore integrate Capstan with the cloud layer to deploy applications with convenient interfaces.

Seastar

The first goal of the OSv kernel is to run existing Linux software, and to reduce the overheads of the Linux APIs which such software uses. For example, because OSv runs the kernel and the single application in one address space, system calls are cheaper in OSv (just a normal function call) and so are context switches. OSv also re-implemented the networking stack to be more efficient on SMP machines (fewer locks and data sharing between cores), but still kept the familiar socket APIs for applications.

However, experiments showed that what now holds back application performance, especially on modern multi-core machines, is not the implementation of the kernel’s APIs, but the very design of these APIs and the way applications are written to use them. For example, the Linux socket API allows a socket to be accessed by many cores (therefore requiring locks), requires that the kernel copy data to and from user space, and so on. Applications written with traditional multi-threaded techniques scale poorly to many cores, as locks, cache line bouncing, and thread context switches take their toll.

For this reason, we designed Seastar, a new open-source C++ API for writing very efficient server applications. Seastar’s API is completely asynchronous (based on the concept of “futures”), so its networking and disk APIs do not require any data copies and minimize the movement of data between cores. Its scheduler multiplexes tiny stack-less “continuations” on a single thread per core, significantly reducing context switch costs, even compared to OSv’s cheap context switches. The Seastar API supports building share-nothing applications: applications where each core handles its own section of memory, without using any locks and with only a minimal amount of explicit communication between cores. Such applications are much more efficient than traditional multi-threaded applications built on locks and shared data, especially as the number of cores grows. We have seen a Seastar-based reimplementation of Memcached achieve 4 times the throughput of the original Memcached implementation, and a Seastar-based reimplementation of Cassandra achieve 10 times the throughput of the original.

Fast and Flexible Communication in the Cloud: RDMA-based Shared Memory

RDMA-based shared memory offers a flexible and highly performant way for VMs to communicate with each other. VMs communicate a lot with each other, since they often host different parts of distributed services and service components. VM communication becomes important, especially in the context of a one-application-per-VM model, as envisioned with OSv. In MIKELANGELO, with OSv as de facto application container, inter-process communication (IPC) works via inter-VM communication. In the following paragraphs, first we describe methods for inter-VM communication and the state of the art for RDMA. Then, we describe how MIKELANGELO is going to advance RDMA technology.

If two VMs reside on the same host, they can use shared memory for IPC. This type of communication between VMs promises data transfers with the highest bandwidth and lowest latency. Implementations of inter-VM IPC use either MPI or sockets as interfaces. In both cases, one can use shared memory in the backend. Some implementations are even able to switch seamlessly to TCP/IP-based communication when remote VMs wish to communicate. Here, remote VMs are VMs that do not reside on the same host. Communication over the TCP/IP stack allows remote communication, but the TCP/IP stack incurs extra overhead. This overhead stems from a complex software stack in the local and remote hypervisor.
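
The switch between shared memory and TCP/IP can be pictured as a simple decision on VM locality. The following sketch is a hypothetical illustration only. The `VM` record, its `host` field, and the transport labels are assumptions and do not correspond to any specific implementation named in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VM:
    name: str
    host: str  # identifier of the physical host (hypothetical field)


def choose_transport(src: VM, dst: VM) -> str:
    """Use shared memory for co-located VMs, otherwise fall back to TCP/IP."""
    return "shared-memory" if src.host == dst.host else "tcp/ip"


web = VM("web", "node-1")
cache = VM("cache", "node-1")
db = VM("db", "node-2")

local_transport = choose_transport(web, cache)
remote_transport = choose_transport(web, db)
print(local_transport, remote_transport)
```

Keeping this decision behind a socket or MPI interface is what lets an application stay unaware of which backend carries its data.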

RDMA is a low-latency and high-bandwidth communication alternative to TCP/IP. RDMA works with both InfiniBand and Converged Ethernet as the physical layer. However, most RDMA implementations push RDMA semantics and interfaces into the VMs, which complicates their drivers and networking subsystems. Furthermore, when VMs on the same host communicate, the hypervisor needs to copy memory unnecessarily.

Nahanni, which uses KVM, provides an alternative mechanism for inter-VM communication that differs from MPI, sockets, and RDMA. Nahanni provides shared memory access between VMs without any special abstraction in the VMs. Furthermore, Nahanni uses direct shared memory pools to provide scale-out for applications such as in-memory databases. However, Nahanni focuses on intra-host communication. NetVM builds on Nahanni to combine shared memory communication with network processing. To provide an efficient implementation, NetVM maps and forwards network packets between VMs on the same host via shared memory.

MIKELANGELO aims to advance the state of the art by providing netchannels for TCP/IP, improved communication APIs, RDMA integration with OSv, and para-virtualised drivers for legacy applications. Netchannels implement the socket API with TCP/IP in a way that works more efficiently and more stably than the traditional TCP/IP stack. MIKELANGELO’s new communication APIs will provide more efficient I/O, zero-copy transfers, and improved cache efficiency. The integration of RDMA within OSv will feature a lightweight RDMA-like communication interface. To support legacy applications, MIKELANGELO will develop para-virtualised I/O device drivers, which will use RDMA as a backend. These para-virtualised devices can then take advantage of zero-copy and lightweight abstraction.

Improved Security for Virtual Machines

Clouds co-host VMs for multiple tenants. Thus, MIKELANGELO needs to address existing vulnerabilities and new ones arising in sKVM. Security poses a major concern in virtualised environments, in cloud computing, and in co-hosted, multi-user, and multi-tenant systems in general. MIKELANGELO’s architecture needs to ensure security in depth by addressing security issues in the host OS, the hypervisor, and the cloud middleware. The host offers an attack surface via side channel attacks. The hypervisor offers an attack surface via VM escapes and shared memory. In the following paragraphs, we first describe the main security concerns that we need to deal with. Then, we describe how we intend to cope with those security concerns.

With a side channel attack, a malicious VM tries to access information on other tenants’ virtual machines through various side channels. The most notable side channel uses timing attacks on a cache. In timing attacks, malicious VMs exploit the fact that a cache is a shared resource. Shared resources, in turn, may leak information about co-located processes. State-of-the-art systems do not protect against co-tenancy side channel attacks beyond providing physical VM isolation on a physical host.

VM escape exploits refer to ways for a malicious VM to escalate its privileges. An escaped VM executes with the same permissions as the hypervisor itself. Thus, an escaped VM can read or modify the data of other VMs that run on the same physical host. Such VM escapes do occur in practice, as shown by exploits such as Cloudburst in VMware and Virtunoid in KVM.

A hypervisor that allows shared memory between VMs, either remotely by RDMA or locally by ivshmem, may provide additional attack vectors. Such attacks may include eavesdropping, traffic modification, or buffer overflow attacks over a remote connection, and uncontrolled DMA access on the same physical host. Suggested mitigations include IPsec to protect RDMA traffic, strict filtering and bounds checking of incoming RDMA traffic, and hardware support for I/O sharing such as Intel’s VT-d technology.
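
As an illustration of the bounds checking mentioned among the mitigations, the sketch below validates that an incoming remote access falls entirely within a registered buffer. It is a simplified model, not an RDMA driver. The `MemoryRegion` type, the function name, and the addresses are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MemoryRegion:
    base: int    # start address of the registered buffer
    length: int  # size of the buffer in bytes


def is_access_allowed(region: MemoryRegion, address: int, size: int) -> bool:
    """Accept an access only if it lies entirely inside the region."""
    if size <= 0:
        return False
    starts_inside = address >= region.base
    ends_inside = address + size <= region.base + region.length
    return starts_inside and ends_inside


region = MemoryRegion(base=0x1000, length=4096)

inside = is_access_allowed(region, 0x1000, 4096)   # exactly the whole region
overflow = is_access_allowed(region, 0x1F00, 512)  # runs past the end
below = is_access_allowed(region, 0x0FFF, 16)      # starts before the base
print(inside, overflow, below)
```

Rejecting both the overflowing and the underflowing request is the point: a check on the end address alone would let an access that starts below the buffer through.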

Security aspects of VM placement and inter-VM traffic routing have so far received relatively little attention. In particular, there are apparent trade-offs between mechanisms that focus on performance and approaches that take security concerns into account. For example, to improve performance one might co-locate closely interacting VMs, in order to reduce latency. However, to improve security the goal might be to strive for isolation of potentially co-harmful VMs.
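
The trade-off can be made explicit with a toy scoring model. This is a hypothetical sketch, not a placement algorithm from the project. The linear score, the weights, and the input values are all assumptions chosen only to show how a security weight can flip a co-location decision.

```python
def colocation_score(traffic: float, risk: float, security_weight: float) -> float:
    """Benefit of co-locating two VMs: the latency gain grows with the
    traffic between them, the penalty grows with the co-tenancy risk."""
    return traffic - security_weight * risk


def should_colocate(traffic: float, risk: float, security_weight: float) -> bool:
    # Placing the VMs on separate hosts is the neutral baseline (score 0).
    return colocation_score(traffic, risk, security_weight) > 0.0


# Same pair of VMs, evaluated under two different security policies.
performance_first = should_colocate(traffic=0.8, risk=0.5, security_weight=1.0)
security_first = should_colocate(traffic=0.8, risk=0.5, security_weight=2.0)
print(performance_first, security_first)
```

With the lower weight, the latency gain dominates and the VMs share a host. With the higher weight, the same pair is separated.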

MIKELANGELO will reduce the attack surface of existing VM technology and of new features in sKVM. To mitigate side channel attacks, MIKELANGELO will investigate mechanisms at the hypervisor level. This approach will mitigate the effects of sharing physical resources with a malicious VM. Thus, MIKELANGELO will reliably block known side channels with the minimal possible effect on performance. The security system will provide this protection only to users that specifically require it. MIKELANGELO will mitigate the effects of VM escapes by leveraging the network and other cloud components. To provide multi-tiered security, MIKELANGELO will incorporate network security with VM placement and cloud monitoring.

Improved Scalability, Usability, and Security in the Cloud: Integration of A Cloud Middleware with sKVM and OSv

MIKELANGELO will integrate the advancements from the virtualisation layers with the cloud layer. The cloud layer consists of the infrastructure layer and the platform layer. This integration will make fast I/O, inter-VM communication, and improved security usable in cloud computing in practice. The following paragraphs describe how MIKELANGELO will extend the infrastructure layer, the platform layer, and how it will integrate monitoring in the stack.

In the infrastructure layer, MIKELANGELO will adapt a cloud middleware to use sKVM for virtualisation in combination with OSv as the preferred guest OS. MIKELANGELO will also extend the cloud middleware to incorporate the security considerations discussed in the previous section. This integration work primarily concerns itself with high performance and scalability. The work on sKVM and OSv provides the potential for high performance and improved scalability and elasticity. To harness this potential, MIKELANGELO will need to integrate those technologies seamlessly into the cloud middleware. New bottlenecks will arise in the cloud middleware that do not surface without sKVM and OSv. Engineers at GWDG will identify those bottlenecks and work to resolve them. Resolving bottlenecks and improving security in the cloud layer relates to resource allocation problems. Thus, GWDG engineers will research resource management algorithms that satisfy security, privacy, performance, and energy constraints. Furthermore, these algorithms will adapt to different circumstances. The cloud bursting module will use this adaptivity to detect cloud bursts quickly.

In the platform layer, MIKELANGELO will integrate OSv’s Capstan for simple application deployment. Capstan resembles Docker. However, Docker uses Linux containers instead of full virtualisation. Capstan will instead use OSv and sKVM to deploy applications easily. In the cloud layer, MIKELANGELO will provide a web-based graphical user interface to deploy pre-packaged applications. Furthermore, the user interface will allow users to manage and monitor those applications. The platform layer will also feature a simple and easy cloudification of applications based on Capstan. Thus, the application management component in the cloud layer will provide a reduced notion of a platform layer.

MIKELANGELO will integrate monitoring as a cross-sectional concern in the cloud layer. This integration builds on previous work from Intel. MIKELANGELO will work on currently open issues, such as methods to describe metrics in a machine-readable way. Metric descriptions need to cover aspects such as metric processing, dimensionality, and the origin of data. Furthermore, MIKELANGELO will integrate monitoring metrics from all layers, starting with sKVM and progressing up to custom applications running via Capstan. To identify metrics that influence performance, MIKELANGELO will deploy an automated analysis tool developed by Intel.
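
One way to picture a machine-readable metric description is as a small record that serialises to JSON. The sketch below is a hypothetical example, not the format used by the project or by Intel’s tooling. The field names and sample values are assumptions that simply mirror the aspects listed above (processing, dimensionality, and origin).

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class MetricDescription:
    name: str
    unit: str
    origin: str        # layer or component that produced the metric
    dimensions: list   # labels that qualify each data point
    processing: str    # how raw samples were turned into this metric


desc = MetricDescription(
    name="disk_read_latency",
    unit="ms",
    origin="sKVM",
    dimensions=["host", "vm"],
    processing="mean over a 10 s window",
)

# Serialise to JSON and read it back to show the description round-trips.
encoded = json.dumps(asdict(desc), sort_keys=True)
decoded = MetricDescription(**json.loads(encoded))
print(encoded)
```

Because the description travels with the metric, an analysis tool can compare data from different layers without hard-coded knowledge of each source.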

Use Cases: Big Data, HPC, and Cloud

Four use cases in three areas (big data, HPC, and cloud computing) drive the requirements, evaluation, and verification of MIKELANGELO’s stack. One use case uses MIKELANGELO for applications in the context of big data. There are two use cases in the context of HPC. The fourth use case covers cloud bursting. We introduce all four use cases briefly in the following paragraphs.

The big data use case will deploy a big data platform, such as Apache Hadoop, on MIKELANGELO’s cloud stack. Currently, big data applications do not lend themselves to execution on virtual infrastructure due to the high I/O overhead of current-generation VMs. However, running big data platforms in a cloud environment would have many benefits. Two important benefits are flexibility and agility. Flexibility means that in a virtualised big data cloud, users could use a range of custom tools to run their analyses on large data sets. Agility means that users can deploy applications as required and when required onto the infrastructure. In this use case, we will integrate a big data platform that will use MIKELANGELO’s cloud stack. Thus, we aim to provide a productive big data cloud. Furthermore, in MIKELANGELO we plan to support users in porting their applications to a big data framework.

The first use case in high-performance computing deals with the simulation of cancellous bones. These simulations allow surgeons to develop better prostheses, such as hip replacements. In practice, such a simulation increases the lifetime of a hip replacement from ten years to multiple decades. Currently, programmers need to adapt such specific simulations to specific hardware and software, which includes the operating environment. This environment includes the operating system and the available interfaces and programming libraries. Virtualisation will be a helpful tool that allows users to provide their own flexible environment in VMs. However, virtualisation currently performs too poorly for I/O operations to be used for HPC. In MIKELANGELO, HLRS will port the cancellous bones simulation to OSv. Furthermore, HLRS will run OSv on sKVM with RDMA on an HPC cluster. This setup will give the users of the cancellous bones simulation, such as clinics, a way to run their simulation on a variety of computers. These computers can then easily include otherwise idle machines on users’ premises.

The second HPC use case runs simulations in computational fluid dynamics with OpenFOAM. A Slovenian aircraft manufacturer called Pipistrel uses these simulations to design new aircraft. For Pipistrel, it does not make sense to run their own HPC cluster, since their engineers require these simulations only periodically in some phases of aircraft design. Renting time on an HPC cluster also does not make sense, since Pipistrel’s workflow requires close interaction with the application. Often, engineers run, evaluate, and then re-run designs with different parameters. Deploying OpenFOAM in a normal cloud built with the usual hardware setup also does not suffice, because OpenFOAM requires a fast interconnect. Thus, in MIKELANGELO, Huawei and Pipistrel will port OpenFOAM to OSv and combine OpenFOAM with sKVM and RDMA. Furthermore, Huawei and Pipistrel will develop tools that will allow engineers to follow an agile workflow to quickly evaluate new aircraft designs.

The cloud bursting use case aims to handle bursts of requests to internet services better. Cloud bursts are an internet phenomenon that happens regularly. A cloud burst appears when a large number of users suddenly request some resources or try to use a service. Then, scaling mechanisms usually deploy new VMs to cope with the high demand. However, such a burst often reaches the limits of the infrastructure very quickly. Two important metrics drive how well a cloud handles cloud bursts: transfer times for VM images and boot times for VMs. OSv shines in both categories. Since Cloudius Systems has designed OSv from scratch, the operating system’s VM image has a size of only a few MBs. Start-up times of OSv usually lie under a second. In this use case, Cloudius will take advantage of OSv and fast I/O with sKVM and RDMA to distribute applications very quickly. Specifically, these applications will carry state, which will be transferred to the freshly deployed VMs.
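
The two metrics combine into a simple estimate of how long a new VM takes to become useful: image transfer time plus boot time. The sketch below works through that arithmetic. All numbers (image sizes, bandwidth, and boot times) are assumed example values, not measurements of OSv or of any Linux distribution.

```python
def time_to_ready(image_mb: float, bandwidth_mb_per_s: float, boot_s: float) -> float:
    """Seconds until a freshly deployed VM can serve requests:
    image transfer time plus boot time."""
    if bandwidth_mb_per_s <= 0:
        raise ValueError("bandwidth must be positive")
    return image_mb / bandwidth_mb_per_s + boot_s


BANDWIDTH_MB_PER_S = 100.0  # assumed network bandwidth

# Assumed example values for a small image with sub-second-class boot
# and for a larger general-purpose image with a slower boot.
small_image = time_to_ready(image_mb=20.0, bandwidth_mb_per_s=BANDWIDTH_MB_PER_S, boot_s=1.0)
large_image = time_to_ready(image_mb=1000.0, bandwidth_mb_per_s=BANDWIDTH_MB_PER_S, boot_s=30.0)
print(f"small image: {small_image:.1f} s, large image: {large_image:.1f} s")
```

Under these assumptions, both terms shrink together for a small image, which is why image size and boot time are treated as the deciding metrics for burst handling.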

Conclusions

MIKELANGELO aims to disrupt cloud computing across the whole virtual infrastructure stack. This stack covers virtualisation technology, operating systems, cloud middleware, big data stacks, and high performance computing. We work to improve the I/O performance of virtualised infrastructures and of the applications running on those infrastructures. MIKELANGELO’s key technical results will be an improved version of KVM, an optimised operating system for the cloud, new RDMA methods, improved security for VMs, and new application deployment methods. Furthermore, MIKELANGELO will apply those advancements to cloud computing and HPC. Thus, our project covers the whole software range of the modern computing stack for a broad set of use cases. These use cases span applications in the fields of big data, HPC, and cloud computing.