Paravirtualization is widely used in virtualized environments to improve system efficiency and to optimize management workloads. In the era of high-performance cloud computing and big data use cases, cloud providers and data centers focus on developing paravirtualization solutions that provide fast and efficient I/O. Thanks to their high bandwidth, low latency, and kernel bypass, Remote Direct Memory Access (RDMA) interconnects are now widely adopted in HPC and cloud centers as an I/O performance booster. To benefit from the advantages that RDMA offers, network communication over InfiniBand and RDMA over Converged Ethernet (RoCE) must be made available to the underlying virtualized devices.

To enable such communication for virtualized devices, experts at Huawei’s European Research Center (ERC) Munich are developing new paravirtual device drivers for RDMA-capable fabrics, called virtio RDMA (vRDMA). Our vRDMA solution aims to break the overhead barrier that prevents HPC cloud adoption, enabling HPC applications to run with performance comparable to bare metal while keeping the benefits of the cloud: agility, cost-efficiency, flexibility, and high bandwidth and low latency for I/O.

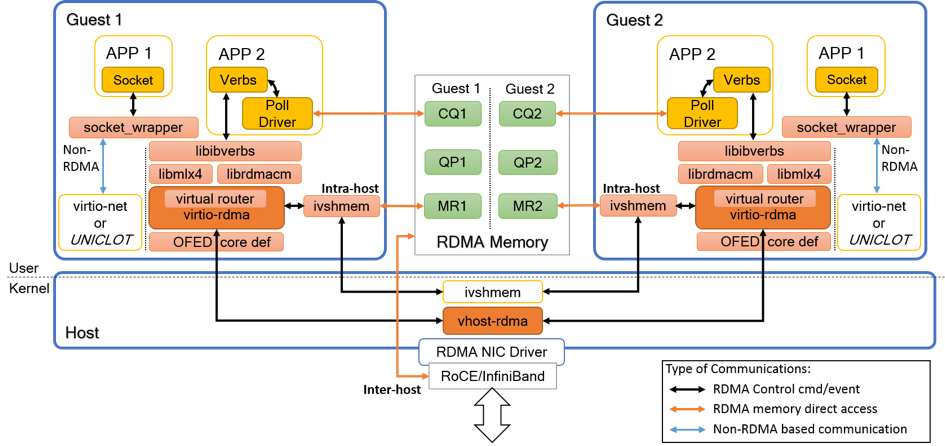

In this project we aimed to develop techniques and mechanisms that accelerate virtual I/O and improve the scalability of multiple virtual machines running on a multi-core host (see Figure 1). We proposed three prototypes of the vRDMA solution, addressing different hardware requirements and needs. Prototype I supports the socket-based API. Prototype II supports guest applications that use RDMA verbs directly. Prototype III will combine the two, allowing socket-based applications to use the Rsocket protocol. We also looked into Inter-VM Shared Memory (IVSHMEM) solutions and present our findings and technical considerations.
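To make the term "socket-based application" concrete, the following is a minimal sketch of the kind of guest code the socket-oriented prototype targets: a program that uses only the standard socket API and knows nothing about RDMA. It is an illustration, not part of the vRDMA code base; the function names `echo_once` and `round_trip` are hypothetical, and the example runs over ordinary loopback TCP.

```python
import socket
import threading


def echo_once(server_sock: socket.socket) -> None:
    """Accept one connection and echo back whatever it sends."""
    conn, _addr = server_sock.accept()
    with conn:
        while True:
            data = conn.recv(4096)
            if not data:  # peer closed its sending side
                break
            conn.sendall(data)


def round_trip(payload: bytes) -> bytes:
    """Send payload to a local echo server over TCP and return the reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        server.listen(1)
        port = server.getsockname()[1]
        worker = threading.Thread(target=echo_once, args=(server,))
        worker.start()

        # The client side uses only plain socket calls (hypothetical guest app).
        with socket.create_connection(("127.0.0.1", port)) as client:
            client.sendall(payload)
            client.shutdown(socket.SHUT_WR)  # signal end of request
            chunks = []
            while True:
                chunk = client.recv(4096)
                if not chunk:
                    break
                chunks.append(chunk)
        worker.join()
    return b"".join(chunks)
```

Calling `round_trip(b"hello")` sends the bytes to the local echo server and returns what comes back. The point of the sketch is that nothing in this application code refers to the transport underneath the socket interface.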

Our vRDMA solutions not only minimize overhead for conventional socket-based interfaces but also enable programming models that rely on RDMA (i.e., the InfiniBand verbs API). As a result, they reduce the performance overhead incurred by virtualization and make cloud and high-performance computing significantly more efficient, which helps big data use cases run in virtualized HPC environments. Small and medium-sized enterprises (SMEs) and other companies that currently cannot deploy their own HPC infrastructure will benefit from our solution: they can explore and scale their workloads with cloud providers, accelerating time to market and improving competitiveness.

Figure 1. Final design of vRDMA prototypes.

Types of interconnects we support:

- InfiniBand

- RDMA over Converged Ethernet

Our vRDMA solution supports the following software configuration on the host and guest sides:

Host

- Ubuntu 14.04

- DPDK 2.1.0

- Open vSwitch 2.4.0

- QEMU 2.3.0

- libvirt 1.2.19

Guest

- Ubuntu 14.04 / OSv

MIKELANGELO Resources

Reports

- D2.13 The First sKVM hypervisor architecture

- D2.16 The First OSv Guest Operating System MIKELANGELO architecture

- D2.20 The intermediate MIKELANGELO architecture

- D3.1 The First Super KVM - Fast virtual I/O hypervisor

- D4.1 The First Report on I/O Aspects

- D4.5 OSv-Guest Operating System- Intermediate Version

Demo Video and How-to