ZeCoRx - Zero Copy Receive

An optimized receive path for Virtual Machines served by virtio

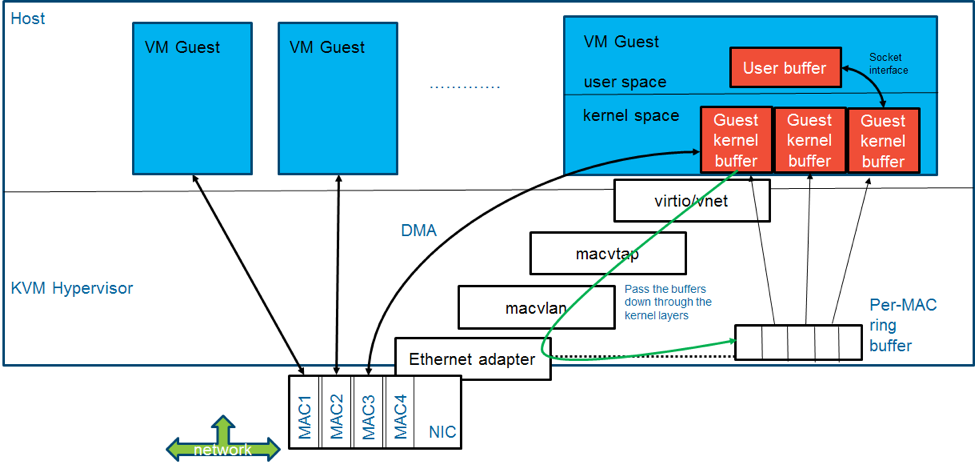

In the KVM hypervisor, incoming packets from the network must pass through several layers of the host Linux kernel before being delivered to the guest VM. Currently, the hypervisor and the guest each maintain their own set of buffers on the receive path, so every received packet is first written into a hypervisor buffer and then copied into a guest buffer. For large packets, this copy dominates the overall processing time.
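To make the cost of this path concrete, the following toy Python model (an illustration, not kernel or virtio code) traces the conventional receive path. The NIC writes a packet into a host-owned buffer, and the data is then copied into a buffer the guest posted earlier. The class names `GuestRxQueue` and `CopyingRxPath` are hypothetical, and dropping the packet when the guest has no free buffer is a simplifying assumption of the model.

```python
# Toy model of the conventional (copying) receive path, for illustration
# only. GuestRxQueue and CopyingRxPath are hypothetical names, not kernel
# or virtio APIs.
from collections import deque


class GuestRxQueue:
    """Receive buffers the guest has made available to the host."""

    def __init__(self, n_buffers: int, buf_size: int) -> None:
        self.free = deque(bytearray(buf_size) for _ in range(n_buffers))
        self.delivered = []  # (guest_buf, length) pairs handed to the guest


class CopyingRxPath:
    """The host driver owns its DMA buffers; data is then copied to the guest."""

    def __init__(self, guest: GuestRxQueue, buf_size: int) -> None:
        self.guest = guest
        self.buf_size = buf_size
        self.bytes_copied = 0

    def receive(self, packet: bytes) -> bool:
        n = len(packet)
        if n > self.buf_size:
            raise ValueError("packet larger than rx buffer")
        # 1. The NIC writes the packet into a host-owned buffer (models DMA).
        host_buf = bytearray(self.buf_size)
        host_buf[:n] = packet
        # 2. The data is copied into a buffer the guest posted earlier.
        if not self.guest.free:
            return False  # simplification: drop when the guest has no buffer
        guest_buf = self.guest.free.popleft()
        guest_buf[:n] = host_buf[:n]  # the extra copy ZeCoRx aims to remove
        self.bytes_copied += n
        self.guest.delivered.append((guest_buf, n))
        return True


guest = GuestRxQueue(n_buffers=4, buf_size=65536)
path = CopyingRxPath(guest, buf_size=65536)
for size in (64, 1500, 65536):
    path.receive(b"x" * size)
print(path.bytes_copied)  # 67100
```

In this model the number of bytes copied grows linearly with packet size, which is why the copy dominates for large packets.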

Some Linux network drivers support zero-copy transmit (tx). Zero-copy tx avoids copying data between VM guest kernel buffers and host kernel buffers, thus improving tx latency. Buffers in a VM guest kernel that belong to a virtualized NIC are passed through the host device drivers and transferred by DMA directly to the network adapter, without an additional copy into host memory buffers. Because the tx data from the VM guest is already in hand when transmission starts, it is fairly straightforward to map the buffer for DMA and pass the data down the stack to the network adapter driver.

Zero-copy receive (rx) is not yet supported in Linux, and several significant obstacles must be overcome to support it. Receive buffers must be prepared in advance, before data arrives from the network. Currently, these DMA buffers are allocated by the low-level network adapter driver, and the received data is then passed up the stack to be consumed. When rx data arrives, it is not necessarily known a priori which consumer it is destined for; it may eventually be copied into VM guest kernel buffers. The challenge is to allow VM guest kernel buffers to be used directly as DMA buffers, at least when we know that the VM guest is the sole consumer of a particular stream of data.

One approach that has been tried is page-flipping, in which the page holding the received data is remapped into the memory of its destination after the data has already been placed in the buffer. The overhead of performing the page mapping is significant and essentially negates the benefit of avoiding the copy (Ronciak, 2004). Our solution instead introduces several interfaces between the high-level and low-level drivers, so that buffers can be passed down and up the stack when needed. We must also handle the case in which the VM guest has not made enough buffers available to receive the incoming data.
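The buffer-passing idea can be sketched as a toy Python model under several stated assumptions: the receive queue is dedicated to a single guest, the guest hands empty buffers down through a hypothetical call `post_guest_buffers`, and completed buffers are passed back up as `(buffer, length)` notices. For the insufficient-buffer case, the model assumes one possible fallback policy: packets that arrive while no guest buffer is posted land in host buffers and are copied once the guest posts more. The names `ZeroCopyRxQueue`, `post_guest_buffers` and `nic_receive` and this fallback policy are illustrative assumptions, not the actual ZeCoRx interfaces.

```python
# Toy model of zero-copy receive with a fallback path, for illustration
# only. ZeroCopyRxQueue, post_guest_buffers and nic_receive are
# hypothetical names; they are not the actual ZeCoRx interfaces.
from collections import deque


class ZeroCopyRxQueue:
    """An rx queue assumed to be dedicated to a single guest."""

    def __init__(self, buf_size: int) -> None:
        self.buf_size = buf_size
        self.guest_bufs = deque()  # guest buffers passed down to the driver
        self.pending = deque()     # (host_buf, length) for fallback packets
        self.delivered = []        # (guest_buf, length) passed up to the guest
        self.zero_copy = 0
        self.copied = 0

    def post_guest_buffers(self, bufs) -> None:
        """Downward interface: the guest hands empty buffers to the driver."""
        bufs = list(bufs)
        if any(len(b) < self.buf_size for b in bufs):
            raise ValueError("guest buffer smaller than rx buffer size")
        self.guest_bufs.extend(bufs)
        # Fallback drain: copy packets that landed in host buffers while the
        # guest had no buffers posted, preserving arrival order.
        while self.pending and self.guest_bufs:
            host_buf, n = self.pending.popleft()
            guest_buf = self.guest_bufs.popleft()
            guest_buf[:n] = host_buf[:n]
            self.copied += 1
            self.delivered.append((guest_buf, n))

    def nic_receive(self, packet: bytes) -> None:
        n = len(packet)
        if n > self.buf_size:
            raise ValueError("packet larger than rx buffer")
        # Invariant: pending is empty whenever guest_bufs is non-empty.
        if self.guest_bufs:
            # Zero-copy case: the NIC writes straight into guest memory
            # (models DMA); only a (buffer, length) notice goes up.
            guest_buf = self.guest_bufs.popleft()
            guest_buf[:n] = packet
            self.zero_copy += 1
            self.delivered.append((guest_buf, n))
        else:
            # Fallback: no guest buffer is available, so use a host buffer
            # and copy later, when the guest posts more buffers.
            host_buf = bytearray(self.buf_size)
            host_buf[:n] = packet
            self.pending.append((host_buf, n))


q = ZeroCopyRxQueue(buf_size=2048)
q.post_guest_buffers([bytearray(2048) for _ in range(2)])
for i in range(3):
    q.nic_receive(bytes([i]) * 100)
print(q.zero_copy, q.copied, len(q.pending))  # 2 0 1
q.post_guest_buffers([bytearray(2048)])
print(q.zero_copy, q.copied, len(q.pending))  # 2 1 0
```

In this sketch the copy only happens on the fallback path, so the common case pays no copy as long as the guest keeps enough buffers posted.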

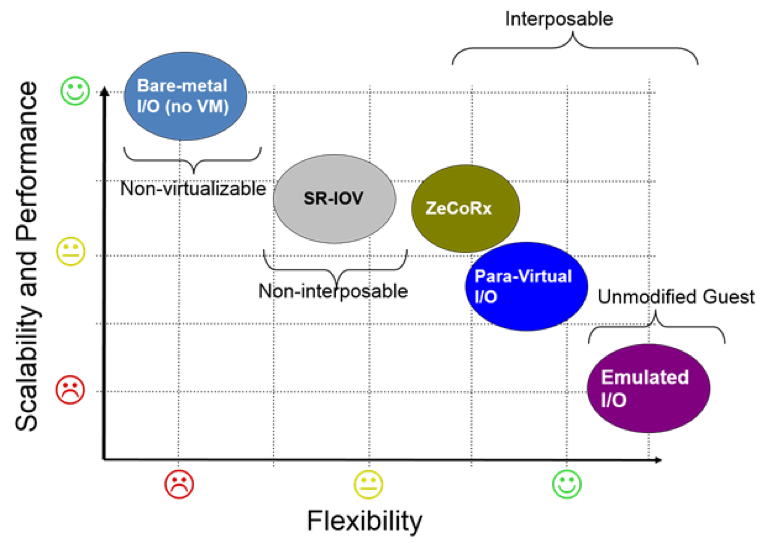

We expect ZeCoRx to achieve general-case I/O performance similar to that of VMs served by direct device assignment, while retaining the full range of virtualization benefits available to VMs served by widely used virtualized I/O methods.

ZeCoRx was presented on several occasions throughout 2017. In May, the design was published as an extended abstract and a poster at SYSTOR’17. In September, the team held a dedicated BoF session at the Linux Plumbers Conference, and in October the feature was presented to the Linux developer community at KVM Forum. Each occasion drew interest from the community and industry, which led to fruitful liaisons and collaboration opportunities.

Soon after the design was finalized around May 2017, the IBM MIKELANGELO team began implementing the feature in order to validate the architecture. Several technical obstacles were discovered and resolved during this phase. The first stable working prototype was ready in December 2017, completing the architectural validation of ZeCoRx.

The team is now working on performance validation. To this end, we are stabilizing and tuning the implementation so that it can be assessed against the thorough performance evaluation plan the team has put together. The next step will be to create community patches and upstream them so the technology can be put into use. In parallel with the upstreaming work, we plan a scientific publication to share the lessons learned and the results with the academic community. Once the patches are accepted by the community, we plan to popularize the feature at developer events. To support this, we are preparing documentation such as tutorials, man pages and usage guides. This material is currently available in the MIKELANGELO deliverables and will be published after performance validation and upstreaming.